VOSS Automate Deployment Topologies

Overview

Architecturally, VOSS Automate offers two main deployment topologies:

Unified Node Cluster

Modular Cluster

In addition, the following options are available:

Cloud deployments

VOSS Automate Cloudv1

Unified Node Cluster Topology

VOSS Automate’s Unified Node Cluster topology comprises these options:

Single-node cluster (cluster-of-one/standalone)

Single-node cluster (cluster-of-one/standalone) with VMware HA

2 Node with Web proxies

4 Node with Web proxies

6 Node with Web proxies

Important

Choose either a Unified Node deployment or a Modular Architecture deployment.

In a Unified Node deployment, VOSS Automate is deployed either as a single node cluster, 2 unified nodes, or a cluster of multiple nodes with High Availability (HA) and Disaster Recovery (DR) qualities.

Each node can be assigned one or more of the following functional roles:

WebProxy - load balances incoming HTTP requests across unified nodes.

Single node cluster - combines the Application and Database roles on one node, for use in a non-clustered test environment.

Unified - combines the Application and Database roles like the Single node cluster role, but is clustered with other nodes to provide HA and DR capabilities.

The nginx web server is installed on the WebProxy, Single node cluster, and Unified node, but is configured differently for each role.

In a clustered environment containing multiple Unified nodes, a load balancing function is required to offer HA (High Availability providing failover between redundant roles).

VOSS Automate supports deployment of either the WebProxy node or a DNS load balancer. Here are some considerations in choosing a WebProxy node vs. DNS:

The WebProxy takes load off the Unified node by delivering static content (HTML and JavaScript). When DNS or a third-party load balancer is used, the Unified node has to serve this content itself.

DNS does not know the state of the Unified node.

The WebProxy detects if a Unified node is down or corrupt. In this case, the WebProxy will select the next Unified node in a round robin scheme.
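To make the difference between the two options concrete, the following minimal Python sketch contrasts a health-aware round-robin selector with plain DNS-style rotation. It only models the behaviour described above; it is not the VOSS WebProxy or its nginx configuration, and the names `RoundRobinProxy`, `dns_round_robin`, and `un1` to `un3` are hypothetical.

```python
from typing import Dict, List


class RoundRobinProxy:
    """Hypothetical model of a health-aware round-robin selector.

    Unlike plain DNS rotation, it skips nodes that are marked as down.
    """

    def __init__(self, nodes: List[str]) -> None:
        self.nodes = list(nodes)
        self.healthy: Dict[str, bool] = {n: True for n in self.nodes}
        self._next = 0  # index of the next candidate node

    def mark(self, node: str, up: bool) -> None:
        # A real proxy would learn this from failed requests or health checks;
        # here the state is set explicitly.
        self.healthy[node] = up

    def pick(self) -> str:
        # Try each node at most once, starting from the round-robin position.
        for _ in range(len(self.nodes)):
            node = self.nodes[self._next]
            self._next = (self._next + 1) % len(self.nodes)
            if self.healthy[node]:
                return node
        raise RuntimeError("no healthy nodes available")


def dns_round_robin(nodes: List[str], count: int) -> List[str]:
    """Plain rotation with no knowledge of node state, as with DNS."""
    return [nodes[i % len(nodes)] for i in range(count)]


if __name__ == "__main__":
    proxy = RoundRobinProxy(["un1", "un2", "un3"])
    proxy.mark("un2", up=False)
    print([proxy.pick() for _ in range(4)])           # ['un1', 'un3', 'un1', 'un3']
    print(dns_round_robin(["un1", "un2", "un3"], 4))  # ['un1', 'un2', 'un3', 'un1']
```

The node marked as down is skipped by the proxy model, while the DNS-style rotation keeps handing it out.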

We recommend that you run no more than two Unified nodes and one WebProxy node on a physical server (VMware server). We also recommend a separate disk subsystem for each Unified node.
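The per-host recommendation can be checked against a planned VM placement. The sketch below is a hypothetical planning aid, assuming a simple mapping from physical host names to the roles of the VMs each host runs; the role labels, host names, and function name are illustrative, and the separate disk subsystem recommendation is not checked.

```python
from collections import Counter
from typing import Dict, List, Tuple

# Recommended per-host maximums for a Unified Node cluster, from the text above.
# Role names are illustrative labels, not VOSS identifiers.
UNIFIED_HOST_LIMITS: Dict[str, int] = {"unified": 2, "webproxy": 1}


def placement_violations(
    placement: Dict[str, List[str]], limits: Dict[str, int]
) -> List[Tuple[str, str, int]]:
    """Return (host, role, count) for every role that exceeds its per-host limit.

    `placement` maps a physical host name to the roles of the VMs it runs.
    """
    violations = []
    for host, roles in placement.items():
        counts = Counter(roles)
        for role, limit in limits.items():
            if counts[role] > limit:
                violations.append((host, role, counts[role]))
    return violations


if __name__ == "__main__":
    placement = {
        "esxi-a": ["unified", "unified", "webproxy"],
        "esxi-b": ["unified", "unified", "unified", "webproxy", "webproxy"],
    }
    print(placement_violations(placement, UNIFIED_HOST_LIMITS))
    # [('esxi-b', 'unified', 3), ('esxi-b', 'webproxy', 2)]
```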

The following deployment topologies are defined:

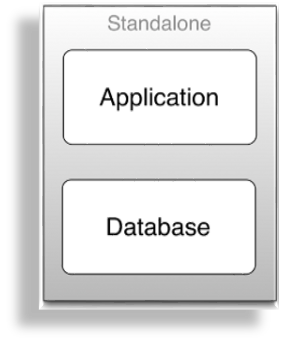

Test: a standalone, single node cluster with Application and Database roles combined. No High Availability/Disaster Recovery (HA/DR) is available.

Important

This deployment should be used for test purposes only.

Production with Unified Nodes: a clustered system comprising:

2, 3, 4 or 6 Unified nodes (each with combined Application and Database roles)

0 to 4 (maximum 2 if 2 Unified nodes) WebProxy nodes offering load balancing. The WebProxy nodes can be omitted if an external load balancer is available.
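The node counts listed above can be expressed as a small validation function. This is a hypothetical sketch that encodes only the counts in this list (it does not cover the Test topology); the function and constant names are assumptions.

```python
from typing import List

# Allowed counts for a production Unified Node cluster, as listed above.
ALLOWED_UNIFIED_COUNTS = {2, 3, 4, 6}
MAX_WEBPROXIES = 4
MAX_WEBPROXIES_WITH_TWO_UNIFIED = 2


def check_unified_production(unified: int, webproxies: int) -> List[str]:
    """Return a list of problems; an empty list means the counts are allowed."""
    problems = []
    if unified not in ALLOWED_UNIFIED_COUNTS:
        problems.append(
            f"Unified node count {unified} is not one of {sorted(ALLOWED_UNIFIED_COUNTS)}"
        )
    # With only 2 Unified nodes, at most 2 WebProxy nodes are allowed.
    limit = MAX_WEBPROXIES_WITH_TWO_UNIFIED if unified == 2 else MAX_WEBPROXIES
    if not 0 <= webproxies <= limit:
        problems.append(f"WebProxy node count {webproxies} is outside 0..{limit}")
    return problems


if __name__ == "__main__":
    print(check_unified_production(4, 2))  # []
    print(check_unified_production(2, 3))  # ['WebProxy node count 3 is outside 0..2']
```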

Single-node cluster (cluster-of-one/standalone)

A single-node cluster (cluster-of-one/standalone) deployment should be used for test purposes only.

The table describes the advantages and disadvantages of a single-node cluster (cluster-of-one/standalone) deployment topology:

| Advantages | Disadvantages |
|---|---|

Single-node cluster (cluster-of-one/standalone) with VMware HA

The table describes the advantages and disadvantages of a single-node cluster (cluster-of-one/standalone) with VMware HA deployment topology:

| Advantages | Disadvantages |
|---|---|

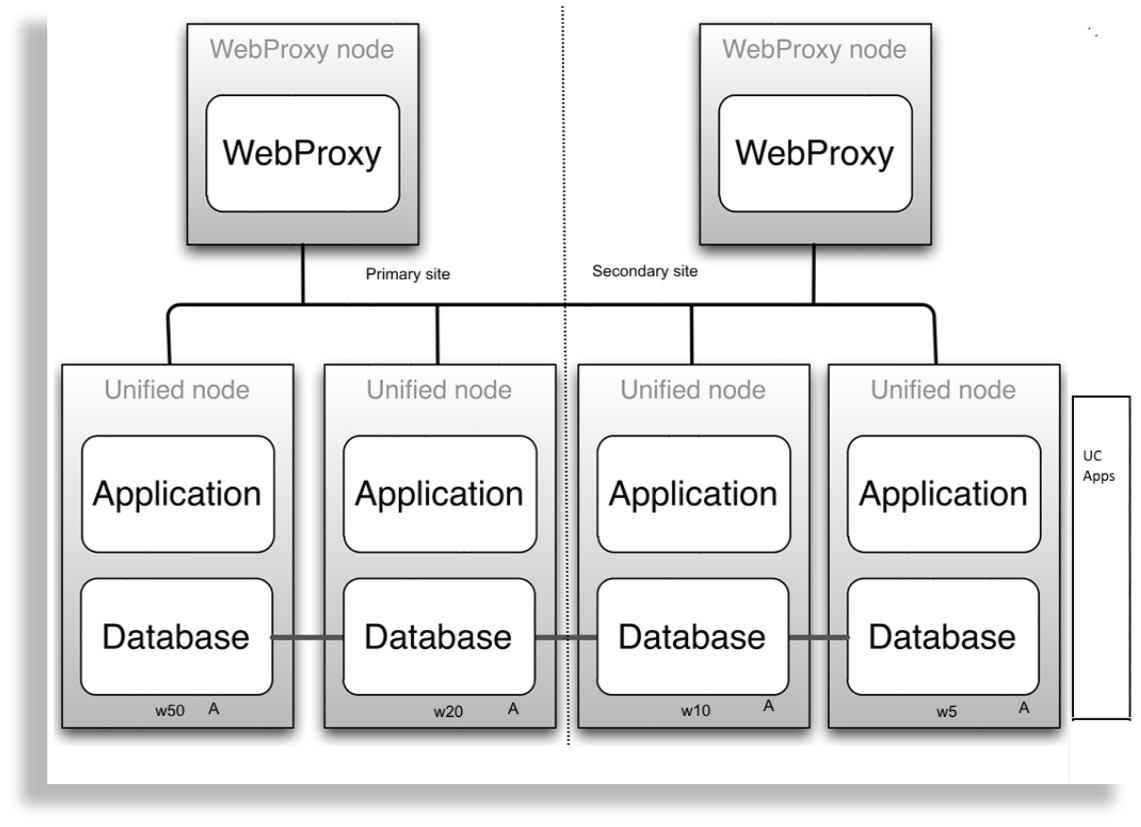

Multinode Cluster with Unified Nodes

To achieve Geo-Redundancy using the Unified nodes, consider the following:

Either four or six Unified nodes - each node combining Application and Database roles - are clustered and split over two geographically disparate locations.

Two Web Proxy nodes provide High Availability, ensuring that an Application role failure is handled gracefully. More Web Proxy nodes may be added if they are required in a DMZ.

We strongly recommend that customer end users are not given the same level of administrator access as the restricted groups of provider and customer administrators. For this reason, use Self-service web proxies as well as Administrator web proxies.

Systems with only Self-service web proxies are recommended only where the system is customer-facing but the customer does not administer the system themselves.

Web Proxy and Unified nodes can be contained in separate firewalled networks.

Database synchronization takes place between all Database roles, thereby offering Disaster Recovery and High Availability.

In a 6-node cluster, all nodes are active. In an 8-node cluster (with latency between data centers greater than 10 ms), the 2 nodes in the DR data center are passive; in other words, the voss workers 0 command has been run on the DR nodes.

Primary and fall-back Secondary Database servers can be configured manually. Refer to the Platform Guide for further details.
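The active/passive rule for larger clusters can be sketched as a planning helper that lists the nodes on which the voss workers 0 command would be run. This is a hypothetical sketch: the function name, node names, and data center labels are assumptions, the 6 + 2 split in the usage example is assumed for illustration, and the helper only decides which nodes are affected; it does not run any command.

```python
from typing import Dict, List

LATENCY_THRESHOLD_MS = 10.0  # threshold quoted in the text above


def passive_nodes(node_dc: Dict[str, str], dr_dc: str, latency_ms: float) -> List[str]:
    """Return the nodes that would be made passive (voss workers 0).

    `node_dc` maps each Unified node name to its data center label.
    """
    if len(node_dc) == 8 and latency_ms > LATENCY_THRESHOLD_MS:
        return sorted(node for node, dc in node_dc.items() if dc == dr_dc)
    # For example, in a 6-node cluster all nodes remain active.
    return []


if __name__ == "__main__":
    eight = {f"un{i}": ("DC1" if i <= 6 else "DR") for i in range(1, 9)}
    print(passive_nodes(eight, "DR", latency_ms=25.0))  # ['un7', 'un8']
    six = {f"un{i}": ("DC1" if i <= 4 else "DR") for i in range(1, 7)}
    print(passive_nodes(six, "DR", latency_ms=25.0))  # []
```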

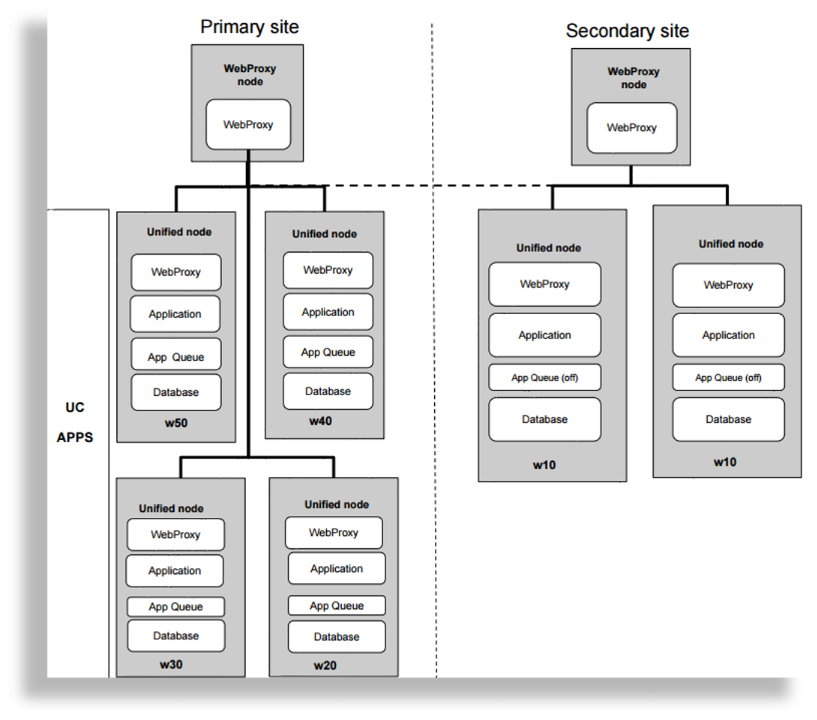

Example: 6-Node Cluster

The diagram illustrates an example of a 6-Node Cluster:

Example: 8 Node Cluster

The diagram illustrates an example of an 8-Node Cluster:

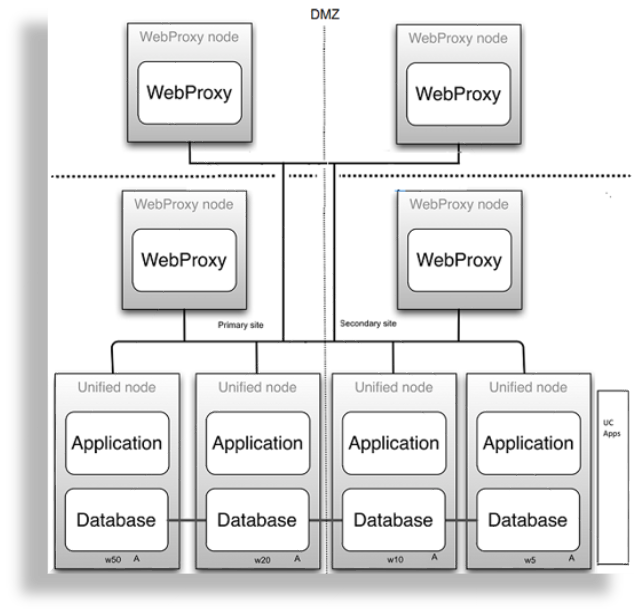

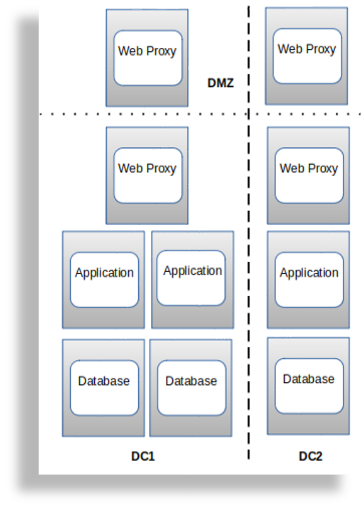

Example: 2 Web Proxy Nodes in a DMZ

The diagram illustrates an example of 2 Web proxy nodes in a DMZ:

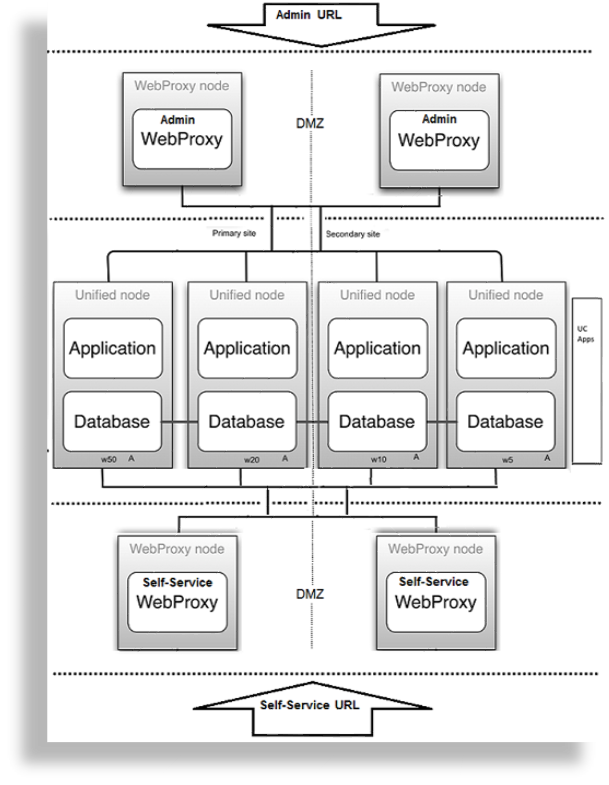

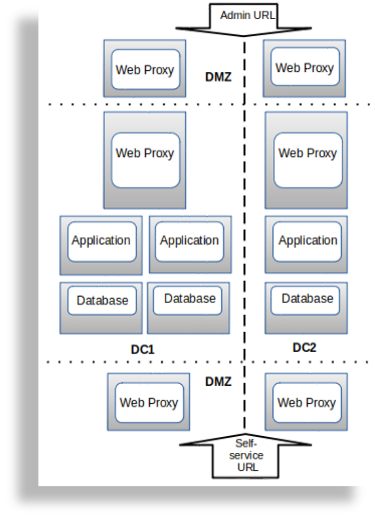

Example: 4 Web Proxy Nodes in a DMZ (2 admin, 2 Self-service)

The diagram illustrates an example of 4 Web proxy nodes (2 admin, and 2 Self-service) in a DMZ:

2 Node Cluster with Unified Nodes

To achieve Geo-Redundancy using the Unified nodes, consider the following:

Two unified nodes - each node combining application and database roles - are clustered and optionally split over two geographically disparate locations.

(Optional) Two web proxy nodes can be used. They may be omitted if an external load balancer is available.

Web proxy and unified nodes can be contained in separate firewalled networks.

Database synchronization takes place from primary to secondary unified nodes, thereby offering Disaster Recovery if the primary node fails.

If the latency between the secondary and primary unified nodes is more than 10 ms, the secondary node must be configured in the same geographical location as the primary node.

Important

With only two Unified nodes, with or without Web proxies, there is no High Availability. The database on the primary node is read/write, while the database on the secondary is read only.

Only redundancy is available.

If the primary node fails, you must manually delete the primary node on the secondary node and then run a cluster provision.

If the secondary node fails, it needs to be replaced.

Refer to the topic on DR Failover and Recovery in a 2 Node Cluster in the Platform Guide.
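The lack of High Availability in this topology can be summarized as a small state model. The sketch below is hypothetical: the class and method names are assumptions, it paraphrases the read/write, read-only, and manual recovery behaviour stated above, and it does not call any VOSS command. Refer to the Platform Guide for the actual recovery procedure.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TwoNodeCluster:
    """Hypothetical model of the 2 Node Cluster behaviour described above."""

    primary_up: bool = True
    secondary_up: bool = True

    def writes_possible(self) -> bool:
        # Only the primary database is read/write, and the secondary is not
        # promoted automatically, so writes depend on the primary alone.
        return self.primary_up

    def data_copy_available(self) -> bool:
        # Redundancy: a copy of the database survives if either node is up.
        return self.primary_up or self.secondary_up

    def recovery_action(self) -> Optional[str]:
        # Manual recovery steps, paraphrased from the text above.
        if not self.primary_up:
            return "delete the primary node on the secondary, then run a cluster provision"
        if not self.secondary_up:
            return "replace the secondary node"
        return None


if __name__ == "__main__":
    cluster = TwoNodeCluster(primary_up=False)
    print(cluster.writes_possible())      # False
    print(cluster.data_copy_available())  # True
    print(cluster.recovery_action())
```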

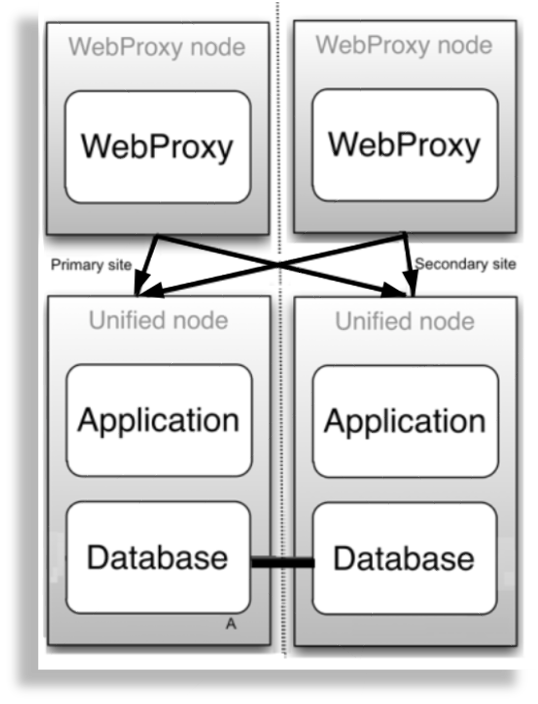

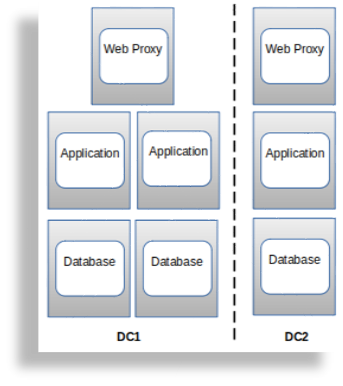

Example: 2 Node Cluster

The diagram illustrates a 2 Node Cluster:

4 Node with Web Proxies

The table describes the advantages and disadvantages of a 4 Node with Web Proxies deployment topology:

| Advantages | Disadvantages |
|---|---|

6 Node with Web Proxies

A 6 Node with Web proxies deployment topology:

Is typically used for multi-data center deployments

Supports Active/Standby

Modular Cluster Deployment Topology

Overview

A Modular Cluster topology has separate Application and Database nodes:

3 Database nodes

1 - 8 Application Nodes

Web Proxies

A Modular Cluster topology has the following advantages:

Increased processing capacity

Horizontal scaling by adding more Application nodes

Improved database resilience with dedicated nodes and isolation from application

Improved database performance by removing application load from the primary database

Important

Choose either a Unified Node Cluster deployment or a Modular Cluster deployment.

VOSS Automate is deployed as a modular cluster of multiple nodes with High Availability (HA) and Disaster Recovery (DR) qualities.

Each node can be assigned one or more of the following functional roles:

WebProxy - load balances incoming HTTP requests across nodes.

Application role node, clustered with other nodes to provide HA and DR capabilities

Database role node, clustered with other nodes to provide HA and DR capabilities

The nginx web server is installed on the WebProxy and application role nodes, but is configured differently for each role.

Related Topics

* Modular Architecture Multinode Installation

* Migrate a Unified Node Cluster to a Modular Cluster

A load balancing function is required to offer HA (High Availability providing failover between redundant roles).

VOSS Automate supports deployment of either the WebProxy node or a DNS load balancer. Consider the following when choosing a WebProxy node vs. DNS:

The WebProxy takes load off the application role node by delivering static content (HTML and JavaScript). When DNS or a third-party load balancer is used, the application role node has to serve this content itself.

DNS does not know the state of the application role node.

The WebProxy detects if an application role node is down or corrupt. In this case, the WebProxy will select the next application role node in a round robin scheme.

We recommend that you run no more than one application role node, one database role node, and one WebProxy node on a physical server (VMware server). When selecting disk infrastructure, consider the high volume of data access by the database role replica sets; depending on the performance of the disk infrastructure, separate disk subsystems may be required.

The following minimum modular cluster topology is recommended:

Important

Single node cluster topologies are not available for modular cluster deployments.

Production with nodes: in a clustered system of 2 data centers:

DC1 = primary data center containing primary database node (highest database weight)

DC2 = disaster recovery data center

The system comprises the following nodes:

3 nodes with application roles (2 in DC1; 1 in DC2)

3 nodes with database roles (2 in DC1; 1 in DC2)

A maximum of 2 WebProxy nodes with 2 data centers, offering load balancing. The WebProxy nodes can be omitted if an external load balancer is available.
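The recommended minimum layout can be checked with a short validation function. This is a hypothetical sketch: the `Node` fields, role labels, and data center labels are assumptions, the limits of 3 database nodes and 1 to 8 application nodes come from the overview of this topology, and the primary database is taken to be the database node with the highest weight, which must be in DC1. The weights in the usage example are arbitrary.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List


@dataclass
class Node:
    name: str
    role: str           # "application", "database" or "webproxy" (illustrative labels)
    dc: str             # "DC1" (primary) or "DC2" (disaster recovery)
    db_weight: int = 0  # only meaningful for database nodes


def check_modular_minimum(nodes: List[Node]) -> List[str]:
    """Return a list of problems; an empty list means the layout matches."""
    problems = []
    per_dc = Counter((n.role, n.dc) for n in nodes)
    apps = [n for n in nodes if n.role == "application"]
    dbs = [n for n in nodes if n.role == "database"]
    proxies = [n for n in nodes if n.role == "webproxy"]

    if len(dbs) != 3 or per_dc[("database", "DC1")] != 2 or per_dc[("database", "DC2")] != 1:
        problems.append("expected 3 database nodes: 2 in DC1 and 1 in DC2")
    if len(apps) > 8 or per_dc[("application", "DC1")] < 2 or per_dc[("application", "DC2")] < 1:
        problems.append("expected at least 2 application nodes in DC1 and 1 in DC2, at most 8 in total")
    if len(proxies) > 2:
        problems.append(f"{len(proxies)} WebProxy nodes exceeds the maximum of 2")
    if dbs:
        # The primary database is the database node with the highest weight.
        primary = max(dbs, key=lambda n: n.db_weight)
        if primary.dc != "DC1":
            problems.append(f"primary database {primary.name} is not in DC1")
    return problems


if __name__ == "__main__":
    layout = [
        Node("app1", "application", "DC1"),
        Node("app2", "application", "DC1"),
        Node("app3", "application", "DC2"),
        Node("db1", "database", "DC1", db_weight=40),
        Node("db2", "database", "DC1", db_weight=30),
        Node("db3", "database", "DC2", db_weight=10),
        Node("wp1", "webproxy", "DC1"),
        Node("wp2", "webproxy", "DC2"),
    ]
    print(check_modular_minimum(layout))  # []
```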

Multinode Modular Cluster with Application and Database Nodes

To achieve Geo-Redundancy using Application and Database nodes, consider the following:

Six Application and Database nodes - 3 nodes with an application role and 3 nodes with a database role - are clustered and split over two geographically disparate locations.

Two Web Proxy nodes provide High Availability, ensuring that an Application role failure is handled gracefully. More Web Proxy nodes may be added if they are required in a DMZ.

We strongly recommend that customer end users are not given the same level of administrator access as the restricted groups of provider and customer administrators. For this reason, use Self-service web proxies as well as Administrator web proxies.

Systems with only Self-service web proxies are recommended only where the system is customer-facing but the customer does not administer the system themselves.

Web Proxy, application and database nodes can be contained in separate firewalled networks.

Database synchronization takes place between all database role nodes, thereby offering Disaster Recovery and High Availability.

All nodes in the cluster are active.

Primary and fall-back Secondary Database servers can be configured manually. Refer to the Platform Guide for further details.

Example: 6 Node Cluster

The diagram illustrates an example of a 6 Node Cluster:

Example: 2 Web Proxy Nodes in a DMZ

The diagram illustrates an example of 2 Web Proxy Nodes in a DMZ:

Example: 4 Web Proxy Nodes in a DMZ

The diagram illustrates an example of 4 Web Proxy Nodes in a DMZ (2 admin, 2 Self-service):

Cloud Deployments

VOSS Automate supports the following Cloud deployments:

Azure

Google Cloud Platform (GCP)

Cloud deployments support all Standalone, Unified, and Modular cluster topologies.

A Cloud deployment topology has the following advantages:

Leverage cloud tooling, such as proxies (which can be used instead of VOSS Web Proxy)

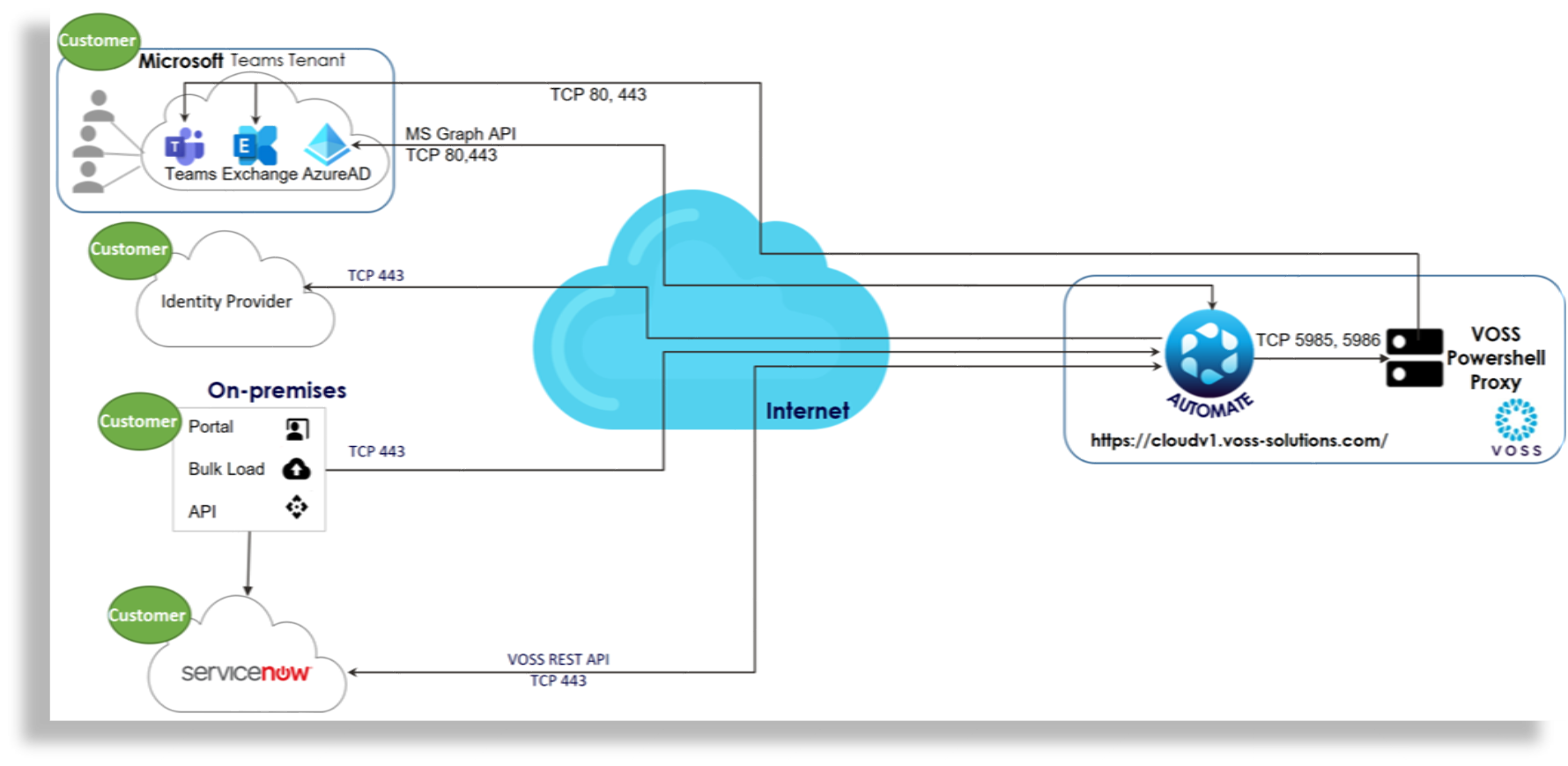

VOSS Automate Cloudv1 (SaaS)

VOSS Automate Cloudv1 is a Software-as-a-Service (SaaS) offering hosted on a shared VOSS Automate instance within Microsoft Azure.

VOSS manages this instance, which seamlessly integrates with a customer’s Unified Communications (UC) platform, Microsoft Exchange, Microsoft Active Directory, and third-party applications like ServiceNow and Identity Providers (IdPs) for Single Sign-On (SSO) authentication.