Multinode Cluster with Unified Nodes

To achieve Geo-Redundancy using Unified nodes, you need to consider the following:

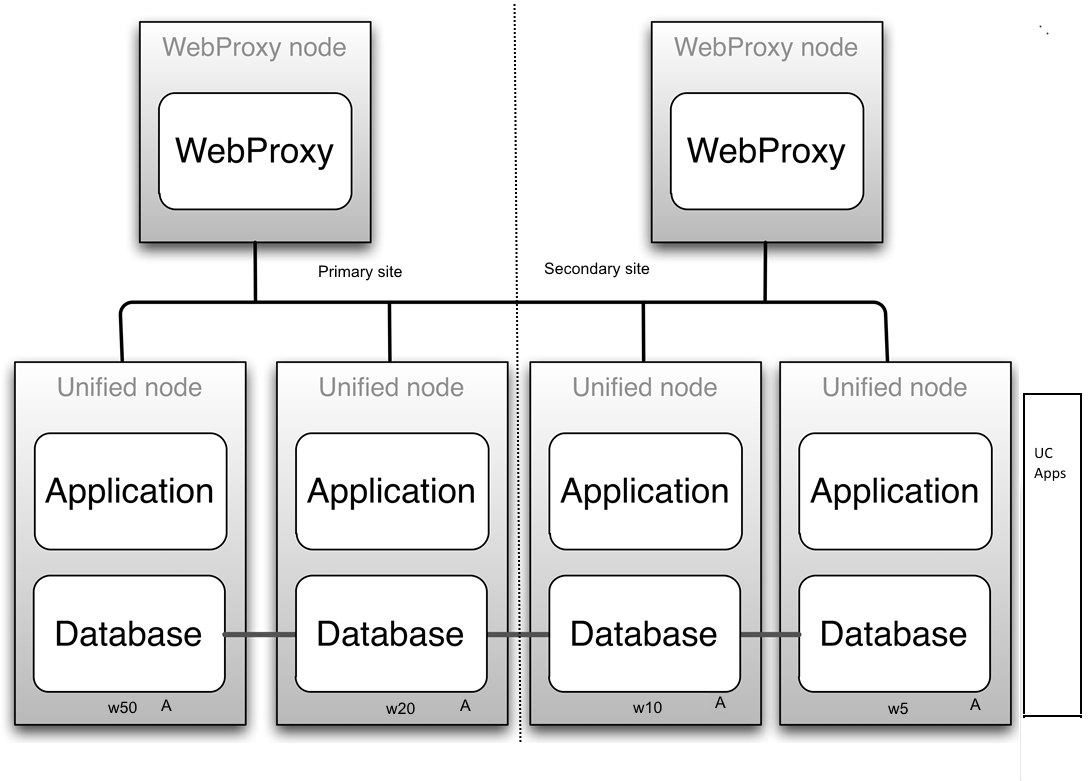

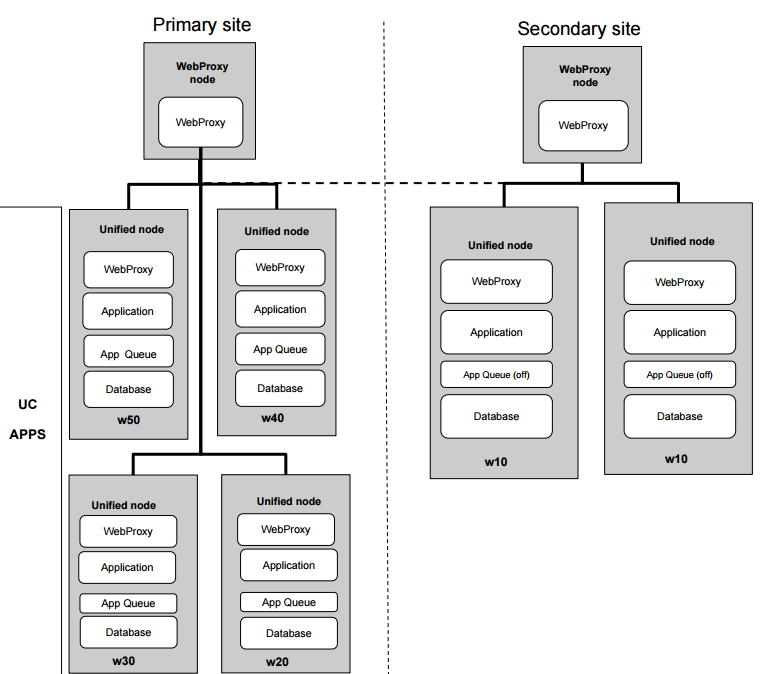

Either four or six Unified nodes - each node combining Application and Database roles - are clustered and split over two geographically disparate locations.

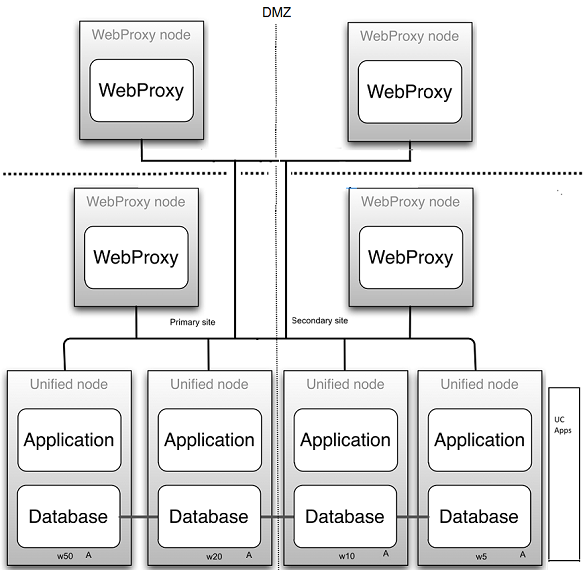

Two Web Proxy nodes provide High Availability, ensuring that an Application role failure is handled gracefully. More may be added if Web Proxy nodes are required in a DMZ.
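The node counts described above can be expressed as a simple layout check. The following is a minimal sketch only, not part of the product: the `Node` class, the `check_topology` function, the hostnames, and the site labels are all hypothetical, and the even split of Unified nodes and the placement of Web Proxy nodes across the two sites are assumptions made for illustration.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    name: str  # hypothetical hostname
    role: str  # "unified" or "webproxy"
    site: str  # hypothetical data center label


def check_topology(nodes):
    """Return a list of problems; an empty list means the layout matches the text."""
    problems = []
    unified = [n for n in nodes if n.role == "unified"]
    proxies = [n for n in nodes if n.role == "webproxy"]

    # Either four or six Unified nodes are clustered.
    if len(unified) not in (4, 6):
        problems.append(f"expected 4 or 6 Unified nodes, found {len(unified)}")

    # The Unified nodes are split over two locations.
    sites = Counter(n.site for n in unified)
    if len(sites) != 2:
        problems.append(f"Unified nodes should span 2 locations, found {len(sites)}")

    # Two Web Proxy nodes are the minimum; more may be added.
    if len(proxies) < 2:
        problems.append(f"expected at least 2 Web Proxy nodes, found {len(proxies)}")

    return problems


# Assumed example layout: 4 Unified nodes (2 per site) plus 2 Web Proxy nodes.
six_node_cluster = [
    Node("un1", "unified", "dc-a"),
    Node("un2", "unified", "dc-a"),
    Node("un3", "unified", "dc-b"),
    Node("un4", "unified", "dc-b"),
    Node("wp1", "webproxy", "dc-a"),
    Node("wp2", "webproxy", "dc-b"),
]

print(check_topology(six_node_cluster))  # prints []
```

A check of this kind could be run against an inventory list before deployment to catch a layout that has too few Web Proxy nodes or places all Unified nodes at one location.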

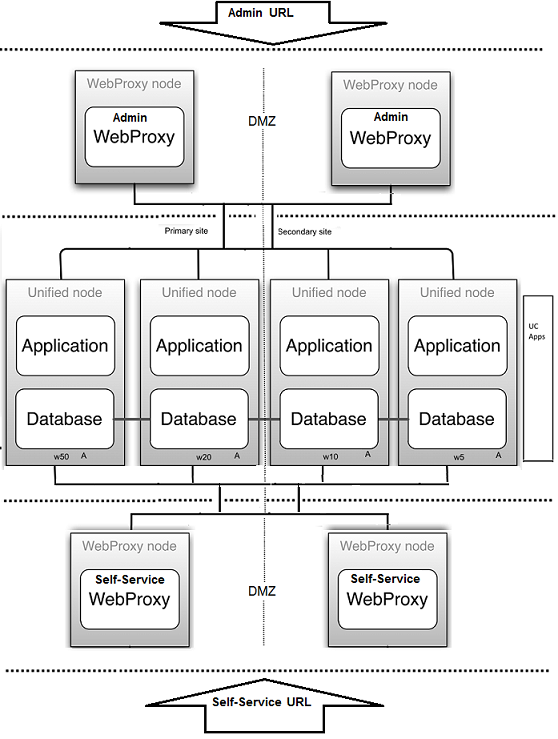

It is strongly recommended that customer end-users are not given the same level of administrator access as the restricted groups of provider and customer administrators. For this reason, Self-service web proxies should be used in addition to Administrator web proxies.

Systems with Self-service-only web proxies are recommended only where the system is customer facing but the customer does not administer the system themselves.

Web Proxy and Unified nodes can be contained in separate firewalled networks.

Database synchronization takes place between all Database roles, thereby offering Disaster Recovery and High Availability.

For 6 unified nodes, all nodes in the cluster are active. For an 8 node cluster (with latency between data centers greater than 10 ms), the 2 nodes at the DR site are passive; in other words, the `voss workers 0` command has been run on the DR nodes.
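The active and passive distinction can be illustrated with a small data model. This is a sketch under stated assumptions, not a description of how the platform tracks node state: the `UnifiedNode` class, the helper functions, the hostnames, and the site labels are hypothetical, and the layout of four Unified nodes at the primary site with two at the DR site is assumed for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UnifiedNode:
    name: str      # hypothetical hostname
    site: str      # "primary" or "dr" (hypothetical labels)
    passive: bool  # True models a node on which `voss workers 0` has been run


def active_nodes(nodes):
    """Names of the nodes that are still active."""
    return [n.name for n in nodes if not n.passive]


def dr_nodes_are_passive(nodes):
    """True when every node at the DR site has been made passive."""
    return all(n.passive for n in nodes if n.site == "dr")


# Assumed layout for illustration: 4 active nodes at the primary site,
# 2 passive nodes at the DR site.
unified_nodes = [
    UnifiedNode("un1", "primary", passive=False),
    UnifiedNode("un2", "primary", passive=False),
    UnifiedNode("un3", "primary", passive=False),
    UnifiedNode("un4", "primary", passive=False),
    UnifiedNode("un5", "dr", passive=True),
    UnifiedNode("un6", "dr", passive=True),
]

print(active_nodes(unified_nodes))          # prints ['un1', 'un2', 'un3', 'un4']
print(dr_nodes_are_passive(unified_nodes))  # prints True
```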

Primary and fall-back Secondary Database servers can be configured manually. Refer to the Platform Guide for further details.

The diagrams in this section illustrate:

- the six node cluster

- the eight node cluster

- 2 Web Proxy nodes in a DMZ

- 4 (2 admin, 2 Self-service) Web Proxy nodes in a DMZ